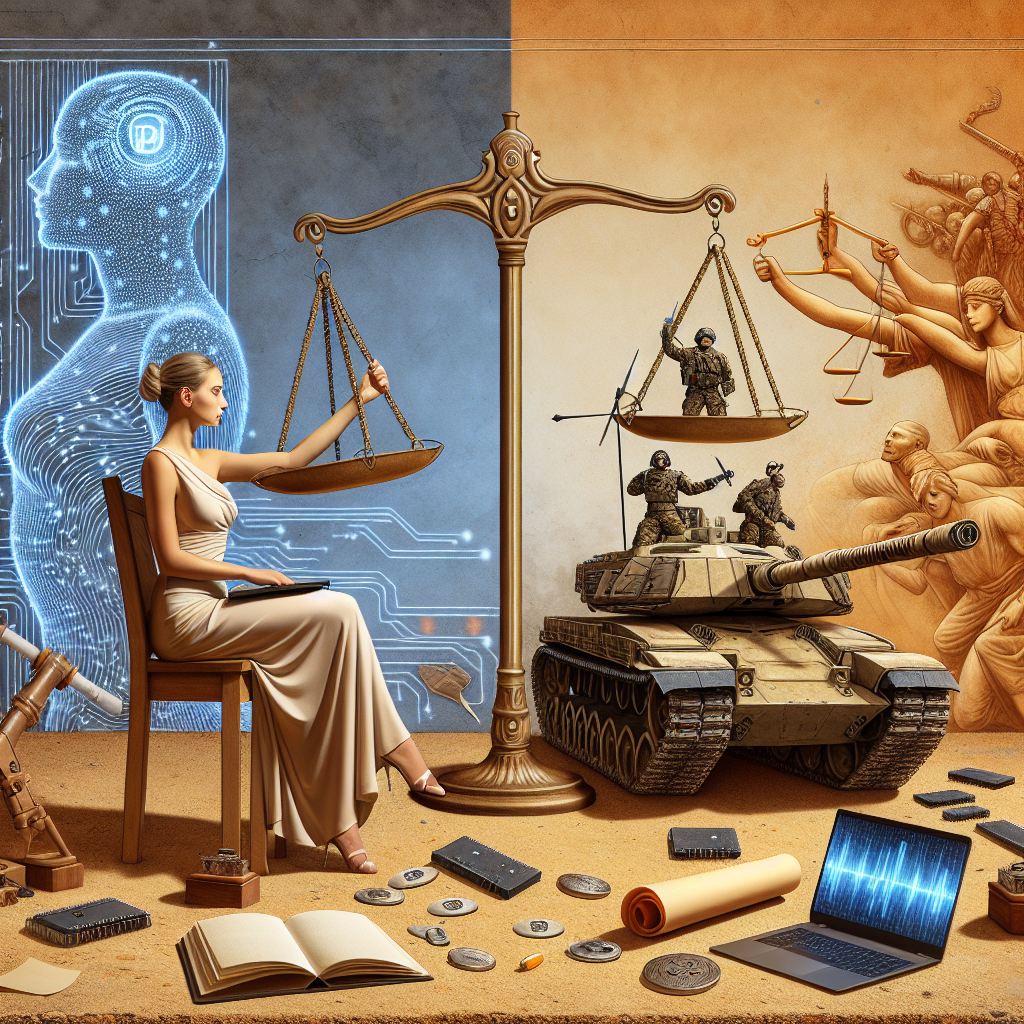

AI Ethics in Modern Warfare Debated

In 1983, a Soviet lieutenant colonel named Stanislav Petrov made a decision that arguably saved the world. When the early-warning system he was monitoring reported incoming American missiles, he judged the alert to be a false alarm and reported it as a malfunction rather than an attack, a report that could have triggered nuclear retaliation against the United States. His reasoning? A human gut feeling that machines can, and do, make mistakes. Fast forward to today, and similar life-or-death calls could soon be made not by humans but by algorithms. The topic of AI ethics in modern warfare has become a critical concern, as nations race to deploy autonomous weapons systems and intelligent decision-making tools on the battlefield.

The Emergence of AI in Combat

Artificial intelligence in military applications has advanced dramatically over the last decade. From reconnaissance drones to real-time target recognition, AI technologies are streamlining decisions that once required layers of human oversight. The allure is obvious: faster responses, greater accuracy, and fewer soldiers placed in harm’s way. However, these benefits come with ethically fraught challenges.

The increasing use of AI-enabled weapons and surveillance systems raises fundamental questions about accountability, legality, and the human cost of error. Systems that raise these questions include:

- Autonomous drones that identify and eliminate targets without direct human involvement.

- AI-powered surveillance tools capable of profiling and monitoring entire populations.

- Predictive algorithms used to assess threats based on incomplete or biased data.

Drawing the Ethical Line

Debates around AI ethics in modern warfare often revolve around one central issue: decision-making autonomy. At what point does removing humans from the loop become a risk rather than an advantage?

Accountability and Decision Making

Unlike traditional weapons, AI systems can act in ways their operators did not predict, which makes accountability hard to trace. If an autonomous drone misidentifies a civilian as a combatant, who is responsible: the developer, the commander, or the machine itself?

Bias in Algorithms

AI systems learn from data, and that data can be skewed by human prejudice or historical inaccuracies. In warfare, this can lead to deadly mistakes, increasing the risk of collateral damage and undermining ethical military conduct.

International Regulation

The United Nations has already initiated discussions about banning or regulating lethal autonomous weapons, chiefly under the Convention on Certain Conventional Weapons. However, enforcing global standards remains complicated, as geopolitical interests often clash. The need for universally accepted ethical frameworks is more urgent than ever.

The Role of Human Oversight

Many experts argue that despite the advantages AI brings to modern militaries, humans must remain in charge of life-and-death decisions. “Human-in-the-loop” safeguards, which require a person to approve any lethal action before it is carried out, are meant to ensure that frontline judgments aren’t left solely to lines of code and datasets.

Conclusion: Navigating a Moral Battlefield

As AI continues to redefine combat strategies, the ethical dimensions cannot be treated as afterthoughts. Nations and defense contractors must collaborate with ethicists, international legal bodies, and technologists to establish responsible guidelines. The debate over AI ethics in modern warfare is not just philosophical; it is a matter of global security and human dignity.

For a deeper look into policy recommendations and international discussions on autonomous weapons, visit the official website of the UN Office for Disarmament Affairs.